Modernizing Classic Data Warehousing with BigQuery

Introduction

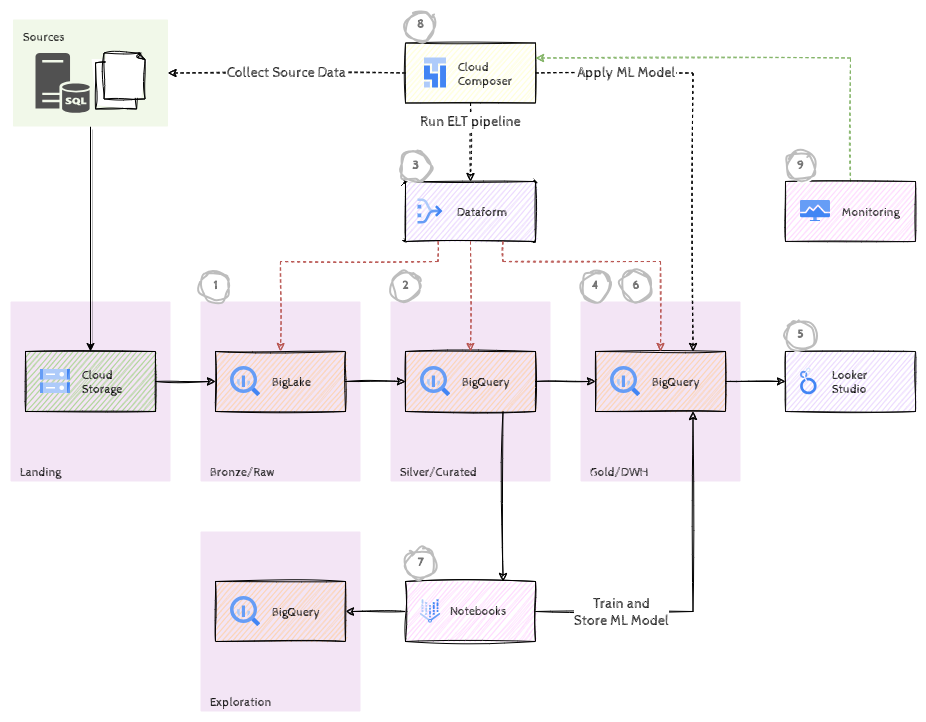

In this hack we’ll implement a classic data warehouse using modern tools, such as Cloud Storage, BigQuery, Dataform, Looker Studio and Cloud Composer. We’ll start with a modified version of the well known AdventureWorks OLTP database (a sample database that contains sales data for a fictitious, multinational manufacturing company that sells bicycles and cycling accessories) and build a dimensional model to report on business questions using a BI visualization tool.

In our scenario, the data has already been copied from the database to a landing bucket in Cloud Storage as CSV files. In the first challenge we’ll create BigLake tables in BigQuery to make that data accessible in BigQuery. In the second challenge we’ll apply some basic cleansing and transformations to load the data into staging tables. In the third challenge we’re going to automate this process using Dataform. The fourth challenge is all about creating the dimensional model and the fact table. And in the fifth challenge we’ll introduce the OBT concept and use Looker Studio to build reports. In the sixth challenge we’ll add some basic security to our data, the seventh challenge is for the data scientists, using interactive notebooks to analyze data and train ML models and finally, we’ll automate, orchestrate and monitor the whole process by tapping into Cloud Composer and Cloud Monitoring in the last two challenges.

Learning Objectives

In this hack, you will explore and learn about the following concepts:

- BigQuery as a classic Data warehouse

- BigLake for accesing data in an object store and applying table semantics

- Dataform for automating data transformation steps

- Dimensional modeling with a star schema

- Access control to data through dynamic data masking & row level security

- Looker Studio for visualizing data

- Python notebooks for data exploration

- Cloud Composer for orchestration

- Cloud Monitoring for monitoring and alerting

Challenges

- Challenge 1: Loading the source data

- Challenge 2: Staging tables

- Challenge 3: Dataform for automation

- Challenge 4: Dimensional modeling

- Challenge 5: Business Intelligence

- Challenge 6: Access control

- Challenge 7: Notebooks for data scientists

- Challenge 8: Cloud Composer for orchestration

- Challenge 9: Monitoring the workflow

Prerequisites

- Basic knowledge of GCP

- Basic knowledge of Python

- Basic knowledge of SQL

- Access to a GCP environment

Contributors

- Murat Eken